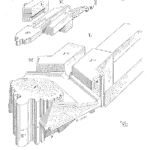

Safety showers and eyewash stations are installed wherever dangerous goods are present. The installation must meet recognised standards such as American National Standard Z358.1. This article summarises the key requirements for safety shower installations and discusses some practical issues.