Kirk Gray, Accelerated Reliability Solutions L.L.C.

Traditional electronics reliability engineering began during the infancy of solid-state electronic hardware. The first comprehensive guide to Failure Prediction Methodology (FPM) appeared in 1956 with the publication of RCA release TR-1100, “Reliability Stress Analysis for Electronic Equipment,” which presented models for computing component failure rates. The “RADC Reliability Notebook” followed in 1959, and later came the military handbook that addressed reliability prediction, the Military Handbook for Reliability Prediction of Electronic Equipment (MIL-HDBK-217). All of these publications and their subsequent revisions built FPM on component failure rates over time, deriving a system MTBF as a reference metric for estimating and comparing the reliability of electronics system designs. At the time these documents were published, it was fairly evident that the reliability of an electronics system was dominated by the relatively short life entitlement of one key electronics component: the vacuum tube.
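To make the handbook approach concrete, here is a minimal sketch of parts-count style arithmetic, assuming every component has a constant failure rate and that rates simply add across the bill of materials. The part names and FIT values are hypothetical placeholders, not MIL-HDBK-217 data, and the real handbook models apply further quality, environment, and stress factors.

```python
# Parts-count style MTBF sketch: constant failure rates summed across parts.
# Part names and FIT values are hypothetical placeholders, not handbook data.

FIT_DEVICE_HOURS = 1e9  # 1 FIT = 1 failure per 1e9 device-hours


def system_mtbf_hours(part_fits: dict[str, float], quantities: dict[str, int]) -> float:
    """Add up component failure rates (in FIT) and invert to get system MTBF in hours."""
    total_fit = sum(part_fits[name] * qty for name, qty in quantities.items())
    failures_per_hour = total_fit / FIT_DEVICE_HOURS
    return 1.0 / failures_per_hour


if __name__ == "__main__":
    fits = {"resistor": 1.0, "capacitor": 2.0, "ic": 50.0}  # hypothetical FIT values
    qty = {"resistor": 200, "capacitor": 150, "ic": 10}
    # 200*1 + 150*2 + 10*50 = 1000 FIT, i.e. an MTBF of about one million hours
    print(f"Predicted MTBF: {system_mtbf_hours(fits, qty):,.0f} h")
```

Note that nothing in this calculation refers to how much stress margin the design actually has.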

In the 21st century, active components have significant life entitlements if they are correctly manufactured and correctly applied in the circuit. Failures of electronics in the first five or so years are almost always a result of assignable causes somewhere between the design phase and mass manufacturing process. It is easy to verify this by reviewing the root causes of verified failures of systems returned from the field. Almost always you will find the cause is an overlooked design margin, an error in system assembly or component manufacture, or accidental customer misuse or abuse. These causes are random in occurrence and therefore do not share a consistent failure mechanism. In general, they cannot be modeled or predicted.

There is little or no evidence of electronics FPM correlating with actual electronics failure rates over the many decades it has been applied. Despite this lack of supporting evidence, FPM and MTBF are still used and referenced by a large number of electronics systems companies. FPM has shown little benefit in producing a reliable product, since it has shown no correlation with the actual causes of field failures or with field failure rates. It may actually result in higher product costs, because it can lead to invalid solutions based on invalid assumptions (Arrhenius, anyone?) about the causes of electronics field failures.

It’s time for a new frame of reference, a new paradigm, for measuring, confirming, and comparing the capability of electronics systems to meet their reliability requirements. The new orientation should be based on the stress-strength interference perspective, the physics of failure, and the material science of electronics hardware.

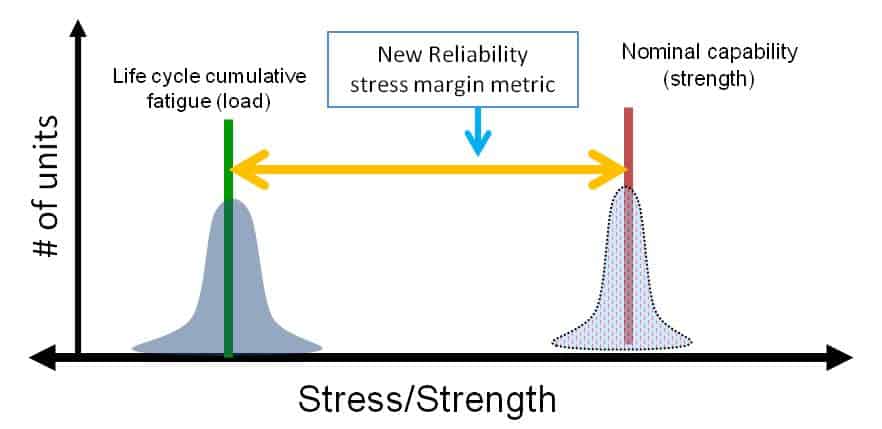

The new metric and its relationship to reliability are illustrated in the stress-strength graph in figure 1. This graph shows the relationship between a system’s strength and the stress, or load, it is subjected to. As long as the load is less than the strength, no failures occur.

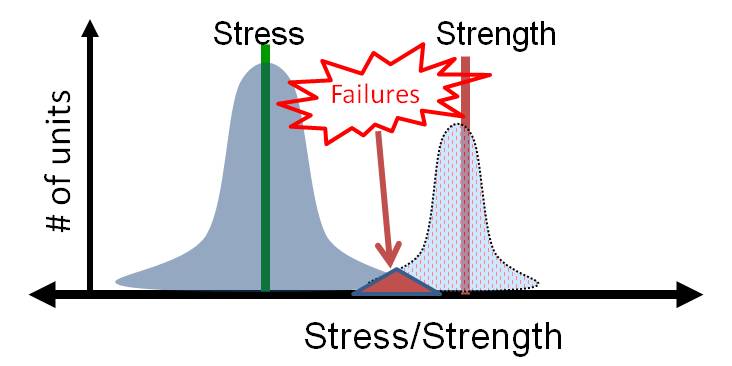

In the stress-strength graph in figure 2, the region where the two curves overlap is where the load on a system can exceed the system’s strength, and that is where failures occur. This relationship holds for bridges and buildings as well as for electronics systems.
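The size of that overlap can be estimated with the classical stress-strength interference calculation. This is a minimal sketch, assuming strength and load are independent and normally distributed; real distributions are often skewed, so treat the result as a relative indicator rather than a prediction. The distance between the two means is the reliability margin, and the failure probability is the chance that a randomly drawn load exceeds a randomly drawn strength.

```python
from math import sqrt
from statistics import NormalDist


def interference_failure_probability(
    mu_strength: float, sd_strength: float, mu_load: float, sd_load: float
) -> float:
    """P(load > strength) for independent, normally distributed strength and load.

    Assumes at least one of the standard deviations is greater than zero.
    """
    margin = mu_strength - mu_load               # distance between the two means
    sd_diff = sqrt(sd_strength**2 + sd_load**2)  # spread of (strength - load)
    # strength - load is normal with mean `margin`; failure means it falls below zero
    return NormalDist().cdf(-margin / sd_diff)


if __name__ == "__main__":
    # Hypothetical numbers: upper operating limit ~ N(110, 8) degC,
    # worst-case end-use temperature ~ N(70, 10) degC.
    p = interference_failure_probability(110.0, 8.0, 70.0, 10.0)
    print(f"Estimated fraction of units overstressed: {p:.2e}")
```

In this model, raising the mean strength and narrowing the strength distribution both shrink the overlap; the margin only matters relative to the combined spread.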

This relationship among stress, strength, and failures agrees with our common-sense understanding that the greater the inherent strength a system has relative to environmental stress, the more reliable it will be. We can refer to the space between the mean strength and the mean stress as the reliability margin, shown in figure 3.

It is similar to a safety margin, except that with an electronics assembly we derive the mean strength entitlement of standard electronics PWBA materials. The mean strength can be found relatively quickly in a stepped stress limit evaluation (a.k.a. HALT), compared with long-duration, estimated worst-case stress simulation testing, whose fundamental goal is to establish a time-to-failure metric.
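The stepped stress idea can be sketched as a simple search: raise the stress in fixed increments, run a functional test at each step, and record the last level at which the unit still operated. This is a minimal illustration only. `operates_at` is a hypothetical stand-in for powering the unit and running its functional test at a given setpoint, and a real HALT also defines dwell times, refines the step size near the limit, and checks whether the unit recovers when stress is reduced (an operating limit) or not (a destruct limit).

```python
from typing import Callable, Optional


def find_operating_limit(
    operates_at: Callable[[float], bool],
    start: float,
    step: float,
    max_level: float,
) -> Optional[float]:
    """Step the stress upward and return the last level at which the unit operated.

    Returns None if the unit already fails at `start`. If it never fails up to
    `max_level`, the highest level tested is returned (the limit was not reached).
    The resolution of the result is limited by `step`.
    """
    last_pass: Optional[float] = None
    level = start
    while level <= max_level:
        if not operates_at(level):
            break  # first failing step: the limit lies between last_pass and level
        last_pass = level
        level += step
    return last_pass


if __name__ == "__main__":
    # Hypothetical unit whose true upper operating limit is 117 degC.
    limit = find_operating_limit(lambda t: t <= 117.0, start=20.0, step=10.0, max_level=200.0)
    print(limit)  # 110.0
```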

Of course, this has to be balanced against the competitive market and the need to build the unit at the lowest cost. What most electronics companies probably do not know is how strong standard electronic materials and systems can actually be under thermal stress, since so few companies actually test to empirical thermal operating limits with thermal protection defeated. Many complex electronics systems can operate from -60 °C or lower to +130 °C or greater using standard components. Typically only one or two components keep a system from reaching stress levels at the fundamental limit of technology (FLT), the point at which design capability cannot be increased with standard materials. Sometimes designs have significant thermal operating margins without modification, and these margins can be used to produce shorter and more effective combined stress screens such as HASS (Highly Accelerated Stress Screens) to protect against manufacturing excursions that result in latent defects.

In most applications of electronics systems, technological obsolescence comes well before components or systems wear out. For most electronics systems we will never empirically confirm their total “life entitlement,” since few systems are likely to remain operational long enough for wear-out failures to occur. Again, it is important to emphasize that we are referring more to the life of solid-state electronics and less to mechanical systems, where fatigue and material consumption result in wear-out failures.

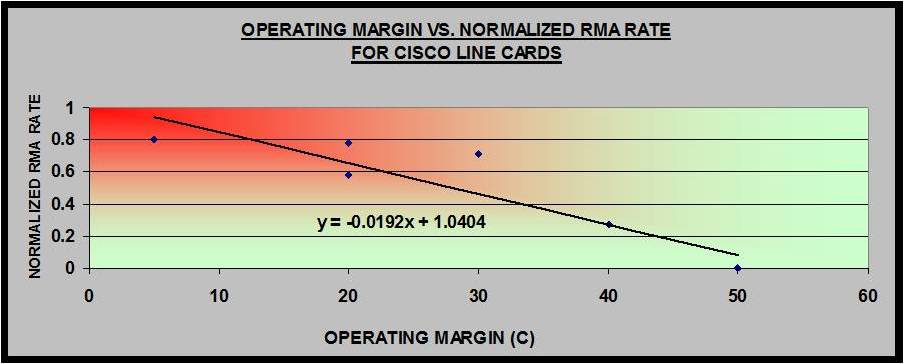

Reliability test and field data are rarely published, but there is one published study with data showing a correlation between empirical stress operational margin beyond specifications and field returns. Back in 2002, Ed Kyser, Ph.D., and Nahum Meadowsong from Cisco Systems gave a presentation titled “Economic Justification of HALT Tests: The relationship between operating margin, test costs, and the cost of field returns” at the IEEE/CPMT 2002 Workshop on Accelerated Stress Testing (now the IEEE/CPMT Workshop on Accelerated Stress Testing and Reliability, ASTR). In their presentation they showed a graph of thermal stress operational margin versus normalized warranty return rate for different line router circuit boards, as shown in Figure 4.

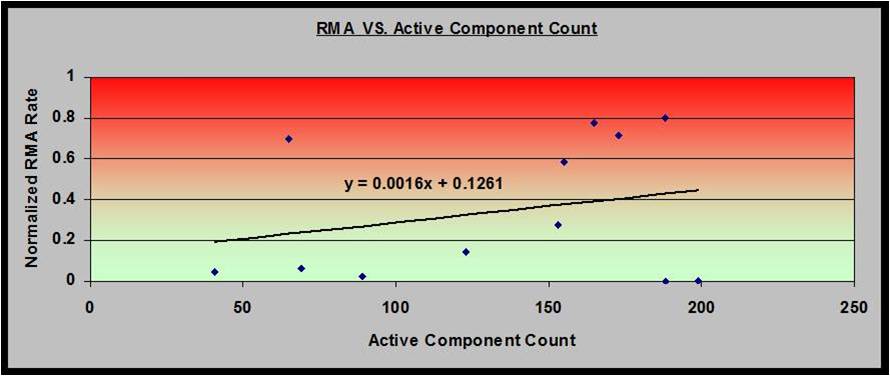

The graph shows the correlation between thermal margin and the RMA (Return Material Authorization) rate, i.e. the warranty return rate. A best-fit curve through this scatter diagram shows a probabilistic relationship between thermal operational margin and warranty returns: the lower the operational margin, the higher the probability of return. Cisco also compared the number of parts (over a larger range of products) with the return rate. The graph of that data is shown in figure 5. The relationship between thermal margin and return rate is ten times stronger than the relationship between board part count and return rate.

This makes sense from a stress-strength perspective. No matter how long a chain is, it is only as strong as its weakest link. No matter how many parts are on the PWBA, the design’s tolerance to variation in manufacturing and end-use stress depends on the least tolerant part.
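The weakest-link argument can be written down directly: a board’s thermal operating window is bounded by the highest low-temperature limit and the lowest high-temperature limit among its parts, however many parts there are. The part names and limits below are hypothetical, and the sketch assumes each part’s in-circuit limit is already known, which in practice is what HALT reveals.

```python
def system_operating_window(
    part_limits: dict[str, tuple[float, float]],
) -> tuple[float, float, str, str]:
    """Return (lower, upper, part limiting the cold end, part limiting the hot end).

    Each entry maps a part name to its (lower, upper) operating limit in circuit.
    """
    cold_limiter = max(part_limits, key=lambda p: part_limits[p][0])
    hot_limiter = min(part_limits, key=lambda p: part_limits[p][1])
    return part_limits[cold_limiter][0], part_limits[hot_limiter][1], cold_limiter, hot_limiter


if __name__ == "__main__":
    # Hypothetical in-circuit thermal limits in degC
    limits = {
        "fpga": (-70.0, 125.0),
        "oscillator": (-45.0, 140.0),
        "regulator": (-80.0, 105.0),
    }
    low, high, cold_part, hot_part = system_operating_window(limits)
    print(f"Window {low} to {high} degC, limited by {cold_part} (cold) and {hot_part} (hot)")
```

Adding more parts with wide limits leaves this window unchanged; only improving the limiting parts widens it.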

For operational reliability, or “soft failures,” in digital electronics, the relationship between thermal limits and field operational reliability is less obvious, since again most electronics companies do not discover empirical thermal limits and therefore do not compare them with warranty return rates. In mass production of high-speed digital electronics, variations in components and PWBA manufacturing can lead to variations in impedance and signal propagation (strength) that overlap the worst-case stresses of the end use (load), leading to marginal operational reliability. It is very challenging to determine a root cause for operational reliability problems that are marginal or intermittent, as the subsystems will likely function correctly on a test bench or in another system and be classified as a CND (Cannot Duplicate) return. Many times the marginal operational failures observed in the field can be reproduced when the system is cooled or heated to near its operational limit. Heating and cooling the system skews the impedance and propagation of signals, essentially simulating the variations in electrical parameters that come from mass manufacturing. If companies do not apply thermal stress up to empirical limits, they will never discover this benefit or be able to use it to find difficult-to-reproduce signal integrity issues.

Faster reliability testing at lower cost is becoming more critical in today’s fast-paced electronics development. Most conventional reliability testing done to some pre-established stress above spec or to “worst case” field stress takes many weeks, if not months, and yields minimal reliability data. Finding an electronics system’s strength by HALT methods is relatively quick, typically taking a week or less and requiring fewer samples. Even if no “weak link” is discovered during HALT evaluations, HALT always provides very useful variable data on empirical stress limits across samples and compared with predecessor designs. Empirically discovered stress limits in an electronics system design are very relevant to potential field reliability, especially thermal stress limits and operational reliability in digital systems. Stress limit data can be used not only to make a business case for the cost of increasing thermal or mechanical margins, but also to compare the consistency of strength between samples of the same product. Large variations in strength limits between samples of a new system can indicate underlying inconsistent manufacturing processes. If the variations are large enough, some percentage of units will fail operationally because the end-use stress conditions exceed the strength of the weaker units.
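Comparing strength consistency between samples can start with basic statistics on the measured limits. This is a minimal sketch, assuming the upper thermal operating limits of a handful of samples are roughly normally distributed (a strong assumption with the small sample counts typical of HALT) and that a single worst-case end-use temperature is known; the function name and numbers are hypothetical.

```python
from statistics import NormalDist, mean, stdev


def strength_spread(limits: list[float], end_use_max: float) -> dict[str, float]:
    """Summarize upper operating limits across samples and estimate the fraction of
    production units whose limit would fall below the worst-case end-use stress.

    Needs at least two samples with different limits (stdev must be > 0).
    """
    mu = mean(limits)
    sigma = stdev(limits)  # sample standard deviation
    fraction_below = NormalDist(mu, sigma).cdf(end_use_max)
    return {"mean": mu, "stdev": sigma, "fraction_below_end_use": fraction_below}


if __name__ == "__main__":
    # Hypothetical upper operating limits (degC) from five HALT samples
    report = strength_spread([118.0, 131.0, 104.0, 125.0, 96.0], end_use_max=85.0)
    print(report)
```

Two designs with the same mean limit but different spreads can have very different estimated fallout, which is why sample-to-sample variation is worth tracking.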

As with any major paradigm shift (here, a move from time to stress as the metric for reliability estimation), there will be many details and challenges still to be worked out in how best to apply it and use the data derived from it. Yet from a physics and engineering standpoint, stress levels as a new reference metric have much stronger potential for relevance and correlation to field reliability than FPM, with its broad assumptions about the causes of field operational and hardware unreliability in current and future electronics systems. If we begin today to use stress limits and combinations of stress limits as a new reference for reliability assessments, we will discover new correlations and benefits, develop better test regimens, and find better reliability performance discriminators, improving real field reliability at the lowest cost.

Thank you, Kirk, for this article and for the other articles by you and other authors that I found on the blog.

I’d like to make a comment and ask a question. I’d be glad to hear your thoughts on the comment and your answer to the question.

** First, I’d like to restate your article to make sure I understand it correctly **

If I understand correctly, the key point of this article is that the time-to-failure distribution (or its median, which carries very limited information) that one could obtain by modeling each failure mechanism and combining them properly (assuming that is possible…) is useless, because failures in electronics systems occur on a time scale much shorter than the product life (several years).

In other words, system failures are caused not by intrinsic limitations of the technology but by extrinsic causes. (Here I define intrinsic/extrinsic as dependent/not dependent on time of use.)

(“Failures of electronics in the first five or so years are almost always a result of assignable causes somewhere between the design phase and mass manufacturing process.”)

As a result, using the time dimension to model a mechanism that does not depend on time is meaningless.

Therefore, it allows neither improving reliability nor benchmarking technology.

You propose to use another figure of merit, which is the stress-load margin.

This allows detecting what could limit the system’s robustness and attempting to improve it.

This can also be used for benchmarking.

But it does not help in getting to the time-to-failure distribution (though FPM does not either, despite trying to…).

Please let me know if I understand correctly, and please make any necessary corrections.

** One comment **

At device level (I am involved in the reliability of the FET transistor), the picture is different.

For each of the few mechanisms involved in FET ageing, we have a given hazard function, always monotonically increasing (with various shapes that we can relate to the underlying physics). The fact that these functions increase monotonically reflects the role of ageing.
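One common way to express a monotonically increasing hazard function is the Weibull hazard with shape parameter beta > 1. The comment does not say which distribution is used for each FET mechanism, so the Weibull form and the parameter values below are assumptions. If the mechanisms act independently (competing risks), their hazards add, which is one way to combine them.

```python
def weibull_hazard(t: float, beta: float, eta: float) -> float:
    """Weibull hazard h(t) = (beta / eta) * (t / eta) ** (beta - 1), for t > 0.

    beta > 1 gives a monotonically increasing hazard (wear-out),
    beta == 1 a constant hazard, and beta < 1 a decreasing one.
    """
    return (beta / eta) * (t / eta) ** (beta - 1)


def combined_hazard(t: float, mechanisms: list[tuple[float, float]]) -> float:
    """Total hazard of independent competing mechanisms, each given as (beta, eta)."""
    return sum(weibull_hazard(t, beta, eta) for beta, eta in mechanisms)


if __name__ == "__main__":
    # Two hypothetical ageing mechanisms with illustrative parameters (eta in hours)
    mechanisms = [(2.0, 1.0e6), (3.5, 4.0e5)]
    for hours in (1e4, 1e5, 1e6):
        print(f"{hours:>9.0f} h: h(t) = {combined_hazard(hours, mechanisms):.3e} per hour")
```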

If you guys at the system level do not see any impact of the time dimension, I think it is precisely because device-level engineers build intrinsically reliable devices! 🙂 By “intrinsically reliable” in this sentence, I mean “having, over the time the system is in use, a failure rate much below the rate of the extrinsic failures that happen during the system life.”

I write this to emphasize that this time-metric approach is not only useful but necessary in this context,

simply because the underlying physics involves a time dependence.

Things are quite simple, in fact: if time does play a role, we should include it in the model/test procedure. If not, as at the system level, of course let’s drop it!

One more thing: it seems that you set the time metric against the load-stress margin. Both can play a role!

That is the case for FETs, where time dependence and stress dependence are used together to extrapolate the lifetime distribution.

Of course, this will never be the lifetime of the system, nor will it help in getting the lifetime of the system; I guess we agree on that.

It just helps (and this is not bad!) make sure that the intrinsic lifetime will never limit the system lifetime.
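As an illustration of using time and stress dependence together, here is a minimal sketch of an inverse power law in voltage, t = A·V^(-n): the exponent is fitted from median lifetimes measured at two stress voltages and then used to scale a lifetime to the use voltage. The power-law model is one of several used for FET mechanisms and is an assumption here, as are all the numbers; a real extrapolation would normally use more than two stress levels and account for temperature as well.

```python
from math import log


def fit_power_law_exponent(v1: float, t1: float, v2: float, t2: float) -> float:
    """Exponent n of t = A * V**(-n), from median lifetimes t1, t2 at voltages v1, v2."""
    # t1 / t2 = (v2 / v1) ** n  =>  n = ln(t1 / t2) / ln(v2 / v1)
    return log(t1 / t2) / log(v2 / v1)


def extrapolate_lifetime(t_stress: float, v_stress: float, v_use: float, n: float) -> float:
    """Scale a lifetime measured at v_stress to the use voltage v_use."""
    return t_stress * (v_stress / v_use) ** n


if __name__ == "__main__":
    # Hypothetical median times-to-failure (hours) at two elevated gate voltages
    n = fit_power_law_exponent(v1=2.0, t1=500.0, v2=2.4, t2=80.0)
    t_use = extrapolate_lifetime(t_stress=500.0, v_stress=2.0, v_use=1.0, n=n)
    print(f"n = {n:.1f}, extrapolated median life at 1.0 V: {t_use:.2e} h")
```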

** One question **

Assume that the main failure mechanisms involved in system failures are indeed not time dependent,

which would push us to use the strength-load methodology with HALT testing.

Under highly accelerated conditions, is the weakness that we want the test to reveal still independent of the stress duration?

Not obvious to me…

It seems to me to be a key hypothesis that needs to be verified.

Indeed, if it is not the case, the stress duration must then be considered.

In other words, the margin between load and strength should be obtained by accounting for the stress duration when determining the strength.

This would also require great care in benchmarking: even if the applied stress is the same from one company to another, the stress duration could differ.

Many thanks,

Augustin

Augustin, Thanks so much for your very good feedback. I am generally addressing the issue of electronics reliability at the system level in my blogs.

You have done a good job of restating the article’s key points. I do believe that time to failure can be determined at the individual device level, with very specific and well-defined physics describing the device and correlated with empirical testing. An individual BGA solder joint on a VLSI package can be modeled with finite element analysis, just as your FETs can. If the end-use application stresses are known with very good precision and are consistent, then a stress-time degradation model for that one specific BGA joint can be relevant. Of course, the thousands of BGA joints on the same PWB will see wide distributions of stress conditions, and at the system level the variations in stress conditions are even larger. The same is true for FETs across the many electrical and mechanical conditions on the same PWB and across systems. The in-circuit application is very relevant to the life.

When vibration is applied using multi-axis pneumatic repetitive shock systems, fatigue damage is induced rapidly and is cumulative. During HALT, the vibration duration should therefore be kept consistent between samples; it can then give a relative scale of how severe a mechanical weakness in the system may be. Without knowing the field life-cycle environment with some precision, however, an accurate time to failure cannot be developed. We could only give a time to failure for the exact conditions of the test, and nothing more. If the field use conditions are known (not assumed) to be very much the same as the test conditions, then it is possible to estimate a time to failure.
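The cumulative nature of fatigue damage can be illustrated with the Palmgren-Miner linear damage rule, a common (and simplified) way to add up damage from different vibration levels. This sketch assumes a Basquin-type S-N curve N = c·S^(-b) with hypothetical constants c and b, and hypothetical test and field profiles. The damage sum, and therefore any time-to-failure estimate derived from it, is only as good as the field profile fed into it.

```python
def cycles_to_failure(stress: float, c: float, b: float) -> float:
    """Basquin-type S-N curve N = c * stress**(-b); c and b are hypothetical fit constants."""
    return c * stress ** (-b)


def miner_damage(profile: list[tuple[float, float]], c: float, b: float) -> float:
    """Palmgren-Miner damage sum over (stress amplitude, applied cycles) pairs.

    Failure is predicted when the sum reaches 1.0.
    """
    return sum(cycles / cycles_to_failure(stress, c, b) for stress, cycles in profile)


if __name__ == "__main__":
    c, b = 1.0e9, 4.0  # hypothetical S-N constants (stress in arbitrary units)
    test_profile = [(20.0, 2.0e3), (30.0, 5.0e2)]  # hypothetical HALT vibration steps
    field_profile = [(2.0, 1.0e7)]                 # assumed field environment
    print(f"Damage from test profile:  {miner_damage(test_profile, c, b):.3f}")
    print(f"Damage from field profile: {miner_damage(field_profile, c, b):.3f}")
```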

Sometimes the life of a mechanical or electromechanical component in an electronics system needs to be quantified (e.g. fans), but in the vast majority of cases, when a mechanical weakness is found in testing, we can increase the strength, or the “material reservoir” if material is consumed (bearings), or reduce the driving stress, extending the fatigue life past expected technological obsolescence with only small modifications and costs.

Many thanks, Kirk, for your prompt and detailed response.

OK, I’m glad that I got your key points and that you agree on the validity of the lifetime approach at the device level, where the underlying physics is well identified.

OK, you highlight again that HALT is dedicated to detecting weaknesses so the system’s reliability can be improved,

but not to extracting a lifetime (except when the stress is applied under exactly the same conditions as in the field, which is then no longer accelerated…).

HALT is done once the system exists. But I am sure you agree that reliability must be taken into account well before the system is designed and fabricated!

Therefore, engineers need reliability inputs to guide their choices.

I guess we agree on this point, do we?

Today, the engineers in charge of buying electronic components choose based on what the manufacturers provide to them (well, I guess…), i.e. mainly lifetime for a given mission profile.

Would you be interested in manufacturers providing strength-load data rather than lifetimes?

Or rather, since one does not exclude the other, both lifetime AND strength-load data?

Thanks,

Augustin

Augustin, I think we have a pretty good agreement on the basic points I am making for systems.

What reliability specifications does your company provide for the FETs you mention?

Do you all provide a FIT rate, or MTBF? Do you have lifetime estimates for different voltage and temperature conditions? Do you all provide an Absolute Maximum specification for use conditions as well as recommended use conditions?

I think the lifetime and stress-strength load data could be provided, but few component suppliers would be willing to publish empirical operating and destruct limits, for competitive and liability reasons.