Why Doesn’t Product Testing Catch Everything?

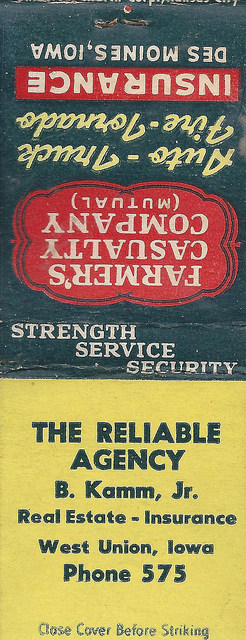

West Union, Iowa, The Reliable Agency, B. Kamm, Jr., Matchbook, Farmers Casualty Company

In an ideal world the designer of a product or system would have perfect knowledge of all the risks and failure mechanisms. The design would then be built perfectly, without any errors or unexpected variation, and would simply function as expected for the customer.

Wouldn’t that be nice.

The assumption that we have perfect knowledge is the kicker though, along with perfect manufacturing and materials. We often do not know enough about:

- Customer requirements

- Operating environment

- Frequency of use

- Impact of design tradeoffs

- Material variability

- Process variability

We do know that we do not know everything we need to create a perfect product, thus we conduct experiments.

We test.

Testing Roles

Testing or running experiments provides information. For reliability engineering, any time we learn what fails or when failures occur, we have the opportunity to design out failure mechanisms. We also gain information that is useful for estimating the number of failures that will occur.

Testing takes many forms and addresses different questions, such as:

- Functional testing – does it do what we want it to do?

- Environmental testing – will it work over the range of expected environments?

- Regulatory testing – will governing agencies approve the device?

- Reliability testing – will it work long enough or with a low enough failure rate?

Testing refines our knowledge by reducing the area of uncertainty. Well-crafted experiments allow us to shed a little light into those imperfect regions of our knowledge.

What is perfect testing?

If we follow this logic that conducting testing provides information, then if we do enough testing we will create perfect information.

Well, no.

First, we have to design the testing. We are saddled with the same imperfect knowledge in this design challenge. If only we could construct testing that would reveal what we do not understand, we could learn what we need to know.

Second, testing is commonly done during development on a sample which is expected to contain information about a population of items not yet created. Beyond the statistical uncertainties of sampling from a population, there are often real differences between what we test and production units. For example, do you box up and ship your products from your distribution center to your lab using all possible routes? Probably not. While moving a sample may not materially change the product, on more than one occasion it has turned out to matter. What else are you assuming is not important?
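To put a number on just the sampling part of that uncertainty, here is a minimal sketch. It assumes a simple pass/fail test in which every unit tested survives, and that the units are independent and representative of production (which, as noted above, they may not be). The function name and the sample sizes are illustrative only. If each unit fails with probability p, the chance that all n pass is (1 - p)^n, so the largest p still consistent with the result at confidence C is 1 - (1 - C)^(1/n).

```python
def zero_failure_upper_bound(n_units: int, confidence: float) -> float:
    """One-sided upper bound on failure probability after n_units pass with zero failures.

    Assumes independent pass/fail trials on units that represent production.
    """
    if n_units <= 0:
        raise ValueError("n_units must be positive")
    if not 0.0 < confidence < 1.0:
        raise ValueError("confidence must be strictly between 0 and 1")
    # Solve (1 - p)^n = 1 - C for p.
    return 1.0 - (1.0 - confidence) ** (1.0 / n_units)


# Illustrative sample sizes (hypothetical), all at 90% confidence.
for n in (5, 30, 300):
    bound = zero_failure_upper_bound(n, 0.90)
    print(f"{n:>4} units, zero failures: failure probability could be up to {bound:.1%}")
```

Even a clean result on 30 units leaves room for a failure probability of roughly 7%, and that is before counting any real differences between the tested sample and production units.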

Third, are we measuring what we need to measure to detect the things we do not yet know affect the design’s performance? I am not sure I can word that more clearly (a limit of my abilities with the language). In any case, we cannot detect something unless we are looking for it. And when we do not know what we are seeking, it is difficult to recognize a subtle symptom when it appears.

Fourth, sometimes an important failure mechanism is masked or hidden by other, less important failure mechanisms. The simplest example is a difference in how damage accumulates. If we attempt to accelerate the damage on a brake pad in testing by using it more often and with higher loads, we may completely miss the damage from corrosion or material degradation. Both wear and corrosion degrade braking force and shorten the time to failure, yet corrosion has no time to develop during an aggressive test, so it does not show up in the test results. In use, the failure mechanisms may switch places as to which one causes the eventual failure.
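The brake pad example can be sketched as two competing clocks: wear that accumulates per braking cycle and corrosion that accumulates per calendar day. This is a deliberately simplified illustration. The life values and usage rates below are hypothetical numbers chosen only to show the switch, and the sketch assumes the two mechanisms act independently, which real pads may not obey.

```python
def time_to_failure_days(
    cycles_per_day: float,
    wear_life_cycles: float = 50_000,    # hypothetical: braking cycles until worn out
    corrosion_life_days: float = 1_500,  # hypothetical: calendar days until corroded
) -> tuple[float, str]:
    """Return (days to first failure, name of the mechanism that causes it)."""
    if cycles_per_day <= 0:
        raise ValueError("cycles_per_day must be positive")
    # Wear depends on how hard the pad is used; corrosion only on elapsed time.
    wear_days = wear_life_cycles / cycles_per_day
    if wear_days <= corrosion_life_days:
        return wear_days, "wear"
    return corrosion_life_days, "corrosion"


# Aggressive lab test versus light field use (both usage rates are hypothetical).
print("accelerated test:", time_to_failure_days(cycles_per_day=5_000))
print("field use:       ", time_to_failure_days(cycles_per_day=20))
```

With these made-up numbers the accelerated test ends in a wear failure after 10 days and never sees corrosion, while the lightly used field unit fails from corrosion long before the pad wears out.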

We do not conduct perfect testing.

Is testing worth the effort?

Sometimes. When done with forethought on what is and isn’t known. When the experiment has clear objectives and the results are useful for upcoming decisions. Plus, when the testing focuses on allowing us to explore what we do not know we do not know.
